What is the best UI technology: Angular or Blazor?

(When you build enterprise business apps using .NET)

Introduction

This post compares in detail the pros and cons of the two SPA frameworks most used by .NET developers: Angular and Blazor.

My customers are enterprises that use .NET to build their line-of-business applications. I compared these two UI technologies to find out which one is the best fit for them, and when they should use either of them instead of traditional ASP.NET MVC.

This study is primarily based on my many years of experience in architecting and developing solutions with Angular and .NET. It also draws on online research and on feedback from other architects and IT consultants. Because I had no formal experience with Blazor, I invested time in studying the framework and in building some small applications with it. I also interviewed developers who had experience with Blazor and integrated their feedback into this study.

Single Page Applications

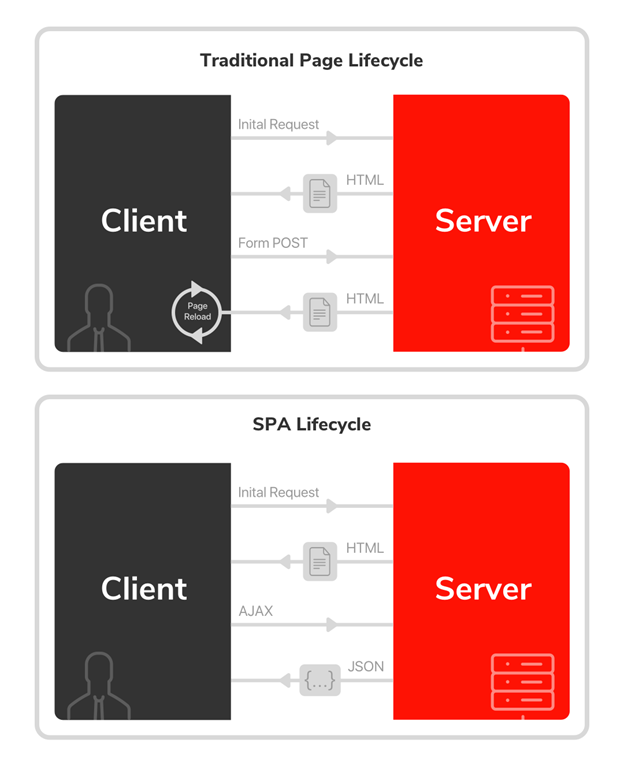

There are two general approaches to building web applications today. Traditional web applications, also called multi-page applications (MPAs) because they are made of multiple pages, perform most of the application logic on the server. Single-page applications (SPAs), on the other hand, perform most of the user interface logic in the web browser and communicate with the web server primarily through web APIs. A hybrid approach is also possible, the simplest being to host one or more rich SPA-like sub-applications within a larger traditional web application.

An MPA reloads the entire page and displays the new one whenever a user interacts with the web app.

A SPA is more like a traditional desktop application: it is loaded once and then refreshes parts of the page without reloading it. When a user interacts with the application, new content is displayed without a full page update, because the pieces of content are downloaded on request in the background. This is possible thanks to AJAX. A single-page application has only one HTML page, which downloads a bunch of assets such as CSS and images, but typically also a lot of JavaScript. That JavaScript code listens for clicks on links and re-renders parts of the DOM of the loaded page whenever the user needs something.
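The click-and-re-render idea can be sketched in a few lines of TypeScript. This is only a minimal illustration under my own assumptions: the route table, the `renderRoute` function, and the `app` element id are hypothetical, and a real framework such as Angular provides a far richer router.

```typescript
// Hypothetical view function: returns the HTML fragment for one screen.
type ViewFn = () => string;

// Hypothetical route table: each path maps to the view that renders it.
const routes = new Map<string, ViewFn>([
  ["/", () => "<h1>Home</h1>"],
  ["/orders", () => "<h1>Orders</h1>"],
]);

// Resolve a path to the HTML for the content area only.
// The surrounding page (header, menu, scripts) is never requested again.
function renderRoute(path: string): string {
  const view = routes.get(path);
  return view ? view() : "<h1>Not found</h1>";
}

// In a browser, a click handler would intercept link navigation, roughly:
//   event.preventDefault();
//   history.pushState({}, "", path);
//   document.getElementById("app")!.innerHTML = renderRoute(path);
```

Only the fragment returned by `renderRoute` changes on navigation, which is why the browser's reload icon never spins in a SPA.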

Although SPAs are extremely popular because they usually offer a better user experience, that does not mean every web application should be built with a SPA architecture. Take, for instance, a news portal like CNN, which is a good example of a multi-page application. How can we tell? Just click any link and watch the reload icon at the top of your browser. Reloading starts because the browser is reaching out to the server and fetching that page and all the resources it needs. The defining trait of a multi-page application is that every new page is downloaded: every request we send to the server, whether we type a new URL or click on a link, leads to a new page being sent back. Other notable examples of multi-page applications are giants like Amazon or eBay. When you use them, you get a new document for every request.

ASP.NET Core MVC is an excellent choice for websites that are better developed as MPAs (see later). But, as explained above, SPAs usually provide a better user experience when the application is highly interactive.

Angular

Angular is still by far the most used SPA framework among .NET developers. This is because it has a lot of similarities with the .NET ecosystem.

- TypeScript: Angular uses TypeScript by default. This means that all documentation, articles, and source code are provided in TypeScript. TypeScript is a superset of JavaScript that compiles to JavaScript. C# and TypeScript were both designed by Anders Hejlsberg, and in many ways TypeScript feels like the result of adding parts of C# to JavaScript. They share similar features and syntax, and both use the compiler to check for type safety. So, for a C# developer, TypeScript is more straightforward to learn than JavaScript.

- MVVM: Angular uses the MVVM pattern. This pattern was invented for WPF and Silverlight (.NET), so many .NET developers who wanted to do web development turned to Angular because it used the same pattern.

- All in the box: Unlike other JS frameworks like Vue or React, Angular is a complete framework that aims to supply everything you need, libraries and tooling included, and to spare you dependency hell so that you can focus on your application. Just like the .NET Framework.

Most other JS frameworks are exactly the opposite: they focus on one aspect and leave you with a lot of choices. Although that focus makes them simpler to learn, .NET enterprise developers are annoyed when they must make a lot of choices. They prefer opinionated frameworks that help them become productive faster, with better supportability.

Angular is part of the JavaScript ecosystem and one of the most popular SPA frameworks today. AngularJS, the preceding version, was introduced by Google in 2009 and was widely adopted by the development community.

In September 2016, Google released Angular 2, a complete rewrite of the framework by the same team. This newer version of the framework uses TypeScript as its programming language. TypeScript is a typed superset of JavaScript built and maintained by Microsoft. The presence of types makes code written in TypeScript less prone to run-time errors. As explained above, TypeScript is the primary reason Angular is so popular in the .NET community.
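To give C# developers a feel for that type safety, here is a small sketch. The `Customer` type, the `findById` helper, and the sample data are hypothetical names I made up for illustration; they are not part of Angular.

```typescript
// Hypothetical domain type, comparable to a C# class or record.
interface Customer {
  id: number;
  name: string;
  email?: string; // optional, similar in spirit to a nullable C# property
}

// Generic function, comparable to a C# method with a constrained type parameter.
function findById<T extends { id: number }>(items: T[], id: number): T | undefined {
  return items.find((item) => item.id === id);
}

const customers: Customer[] = [
  { id: 1, name: "Contoso" },
  { id: 2, name: "Fabrikam", email: "info@fabrikam.example" },
];

const found = findById(customers, 2);

// The return type includes undefined, so the "not found" case is handled explicitly.
const label = found ? found.name.toUpperCase() : "unknown";

// These lines would be rejected by the compiler instead of failing at run time:
// customers.push({ id: "3", name: "Northwind" }); // id must be a number
// found.name; // 'found' is possibly undefined (with strict null checks enabled)
```

Interfaces, generics with constraints, and compile-time null checks will all look familiar to anyone coming from C#.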

The difference between AngularJS and Angular 2 is so radical that there is no simple update path from AngularJS. Migrating an application requires too many modifications because of the different syntax and architecture. In practice, upgrading existing AngularJS apps to the new Angular framework amounts to a complete rewrite.

TypeScript compiles to JavaScript and integrates perfectly with the JavaScript ecosystem. Angular is therefore part of that ecosystem and uses npm to manage dependencies. It can count on the largest community and the largest number of libraries available today. With Angular, you use the largest open-source ecosystem, where you can find everything you need.

But the vast number of packages and their fast rate of change are also a challenge in the enterprise. Making these packages available is the biggest challenge. Take a new Angular application as an example. An empty new Angular application has the following direct dependencies:

- @angular

- @angular/cli

- @ngrx

- rxjs

- typescript

- tslint

- codelyzer

- zone.js

- core-js

And now let’s install all the dependencies by typing:

npm i

... one eternity later ...

added 1174 packages in 116.846s

A new, regular Angular application without any add-ons already uses nearly 1,200 packages!

When your ecosystem has this number of dependencies, you can't inspect them all yourself. You can't hope they're all secure. You can't assume they all have permissive licenses. You can't maintain your registry by hand. You can't formally approve packages by committee, and you can't keep up manually, because across the many packages you're using, updates arrive every single hour. You must move from manual, discrete processes to automated, continuous processes.
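One small piece of such an automated process could look like the sketch below: a license check that a CI job runs on every build. Everything here is an assumption for illustration: the `InstalledPackage` shape, the allow-list, and the sample data are hypothetical, and in practice the package list would come from a dedicated audit or registry tool.

```typescript
// Hypothetical shape of one installed package, as an audit tool might report it.
interface InstalledPackage {
  name: string;
  version: string;
  license: string;
}

// Hypothetical company policy: licenses accepted without manual review.
const allowedLicenses = new Set(["MIT", "Apache-2.0", "BSD-3-Clause", "ISC"]);

// Return the packages that need attention, so a CI job can fail on a non-empty result.
function findPolicyViolations(packages: InstalledPackage[]): InstalledPackage[] {
  return packages.filter((pkg) => !allowedLicenses.has(pkg.license));
}

// Illustrative sample data only; the last entry is a made-up package.
const installed: InstalledPackage[] = [
  { name: "rxjs", version: "7.8.1", license: "Apache-2.0" },
  { name: "zone.js", version: "0.14.2", license: "MIT" },
  { name: "some-internal-fork", version: "1.0.0", license: "UNLICENSED" },
];

const violations = findPolicyViolations(installed);
```

The point of the design is that nobody approves 1,200 packages by hand: the policy is written once, and the pipeline applies it continuously.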

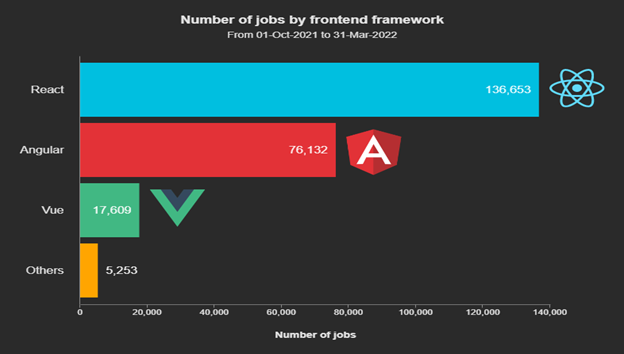

Angular comes with great tools, supported by a large community. Angular has been around for over a decade, while Blazor has been on the market for only a few years. Angular is a production-ready framework with full support for MVVM applications, and it is used by many large companies. Blazor, while used by some large brands, is early in its lifecycle. Angular and other popular JS frameworks like React or Vue come with much more content in the form of courses, books, blogs, videos, and other materials. Angular has dedicated tech events held worldwide and a huge community, and it offers a broad choice of third-party integrations.

To illustrate the difference in adoption, consider the graphic here, which compares the number of jobs offered per frontend technology. Angular comes second, while Blazor is somewhere among the others.

As illustrated above, despite being one of the oldest and most mature SPA frameworks, Angular is not the most used or most loved framework in the JavaScript community. This is primarily due to its complexity. Even for seasoned JavaScript developers, Angular is difficult to master. Its strength as an opinionated framework built on TypeScript comes at the expense of a steep learning curve.

This is even more challenging for most .NET developers, who usually have only a marginal knowledge of JavaScript. They not only have to learn a new language, they also must learn an entirely new ecosystem and framework. In my own experience, this is the greatest challenge for organizations using .NET. It can be solved by recruiting Angular specialists who focus on the frontend. Having a frontend specialist usually improves the user experience, but it comes at the expense of having to split your developers into frontend (Angular) and backend (.NET) developers.

Mixing two technologies coming from different worlds diminishes the flexibility of resource allocation. People must specialize and the potential number of tasks they can work on diminishes. It also increases development time as you need to put more effort into integrating both technologies.

My own experience of working with .NET developers who had to learn Angular confirms that the learning curve is very steep for them. In the past, to cope with this problem, we recruited Angular specialists to coach our .NET teams. We also had to invest in our CI/CD pipeline to support the new technology stack. As a .NET shop that wanted to adopt Angular, we had to make a considerable investment in recruiting the right profiles, supplying the right guidance, and adapting our package management process and tools to the high velocity and number of packages that are typical of the JavaScript ecosystem. Recruiting the right skills was also a challenge, for the reasons explained above and because Angular is somewhat losing traction in the JS community.

When to choose Angular over ASP.NET MVC?

If the team is unfamiliar with JavaScript or TypeScript but is familiar with pure server-side MVC development, they will probably be able to deliver a traditional MVC app more quickly than an Angular app. Unless the team has already learned Angular, or unless the user experience afforded by Angular is needed, traditional MVC is the more productive choice.

Blazor

Blazor is a relatively new Microsoft ASP.NET Core web framework that allows developers to write code for browsers in C#. Blazor is based on existing web technologies like HTML and CSS, but allows the developer to use C# and Razor syntax instead of JavaScript. Razor is the template markup syntax used by ASP.NET MVC, so MVC developers can reuse most of their skills to build apps with Blazor.

Blazor is very promising for .NET developers as it enables them to create SPA applications using C#, without the need for significant JavaScript skills.

An advantage of Blazor is that it uses the latest web standards and does not need added plugins or add-ons to run in different deployment models. It can run in the browser using WebAssembly and offers a server-side ASP.NET Core option.

For .NET developers, learning Blazor is far easier than learning Angular or other JS frameworks. Not only do they not need to master JavaScript and TypeScript, they can also keep using the enterprise-friendly .NET ecosystem they already know. A .NET developer already accustomed to web development through MVC can be productive in a matter of days or weeks, compared to months or years with Angular.

But the biggest advantage of Blazor is its productivity. It allows .NET developers to build entire applications with only C#. It feels more like building desktop apps than web apps. With the server-side version, developers don't have to think about data transfer between client and server. With the client-side version they do, but it is still a lot easier than with Angular because everything is integrated within one .NET solution.

Blazor seems even more productive than traditional MVC, as the developer doesn't have to cope with server page refreshes and can use client-side rendering and events. It feels like developing with Silverlight or WPF, but using Razor, HTML, and CSS instead of XAML. This was also confirmed in our discussion with a consultant at Gartner: based on experiences with several customers, Blazor was the most productive technology for building UIs inside the .NET ecosystem, even compared to MVC.

The adoption of Blazor outside the .NET community will probably remain low. Blazor is hard to sell to today's web developers because it means leaving behind many of the libraries and technologies that have been built up over more than a decade of modern JavaScript development. This means that Blazor will probably stay a niche technology. Blazor is and will be used by teams that know .NET for building internal business applications, but it will probably not be adopted outside this community. As a result, the community and tools will mostly be supported by Microsoft, and tool and library support from the open-source community and vendors will remain weaker than for Angular or other mainstream JS frameworks.

One of the impediments to Blazor adoption in the .NET community is the fear that Blazor will be the next Silverlight and will be around only for a brief period. More than 10 years after the final Microsoft Silverlight release, some developers still fear being 'Silverlighted', that is, seeing a product in which they have invested heavily abandoned by Microsoft. The fear arises because the two technologies are similar: both are developed by Microsoft and both allow us to use the .NET platform to create web applications. They share the same core idea: instead of using JavaScript, we can use C# as the programming language to create web applications.

Inside the enterprise, this risk is largely mitigated by the fact that Microsoft has always supplied relatively long support periods. Even Silverlight was supported by Microsoft for nearly 10 years before it was retired. The risk also has a lot less impact because Blazor does not depend on a browser plugin. Microsoft learned a lot from the Silverlight story: neither ASP.NET nor, later, ASP.NET Core requires any browser plugin to run, and Microsoft decided to use only open web standards for Blazor. As long as browsers support those open web standards, they will also support Blazor.

Client Side (Wasm) vs. Server Side Blazor

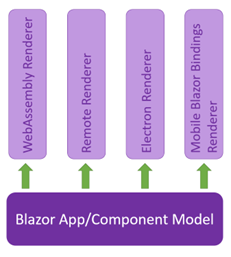

Blazor separates how it calculates UI changes (the app/component model) from how those changes are applied (the renderer). This decouples the renderer from the platform and makes it possible to use the same programming model on different platforms.

Currently, Microsoft supports only two renderers: Blazor client (WebAssembly Renderer) and Blazor Server (Remote Renderer). The implementation code is the same; what differs is the configuration of the hosting middleware. A good practice is to isolate your code from changes to the hosting model by putting all the rendering code in a class library. This will ease the job if you later must migrate to another hosting model or need to support an additional one.
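The separation between component model and renderer can be illustrated with a small TypeScript analogy. To be clear, this is not Blazor code and not Blazor's actual API: the `UiChange`, `UiRenderer`, `InBrowserRenderer`, and `RemoteRenderer` names are hypothetical stand-ins I chose to show the idea.

```typescript
// A UI change computed by the component model (hypothetical and heavily simplified).
interface UiChange {
  elementId: string;
  newText: string;
}

// The renderer decides how changes reach the screen.
interface UiRenderer {
  apply(changes: UiChange[]): void;
}

// Stand-in for a renderer that updates the page directly in the browser.
class InBrowserRenderer implements UiRenderer {
  readonly dom = new Map<string, string>();
  apply(changes: UiChange[]): void {
    for (const change of changes) this.dom.set(change.elementId, change.newText);
  }
}

// Stand-in for a renderer that serializes changes and sends them over a connection.
class RemoteRenderer implements UiRenderer {
  readonly sent: string[] = [];
  apply(changes: UiChange[]): void {
    this.sent.push(JSON.stringify(changes));
  }
}

// Component logic is written once and does not know which renderer is in use.
function incrementCounter(current: number, renderer: UiRenderer): number {
  const next = current + 1;
  renderer.apply([{ elementId: "counter", newText: String(next) }]);
  return next;
}

const local = new InBrowserRenderer();
const remote = new RemoteRenderer();
incrementCounter(0, local);
incrementCounter(0, remote);
```

`incrementCounter` plays the role of the code you would keep in a shared class library: it survives a change of hosting model untouched, because only the renderer is swapped.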

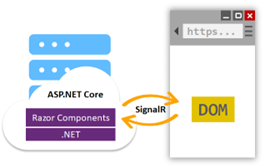

Blazor Server

With Blazor Server you use a classic ASP.NET Core website like ASP.NET MVC but the UI refreshes are handled over a SignalR connection.

As with a SPA, one page is displayed in the browser and then parts of it are refreshed. On the first request, the HTML is generated on the server from the Razor pages. When user interactions or app events trigger a UI update, a UI diff is calculated and sent to the client over a SignalR connection. The client-side JS library used by Blazor Server then applies the changes in the browser.
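The diff-and-patch principle can be sketched as follows. This is a deliberately naive model under my own assumptions, not Blazor's actual diffing algorithm or wire format: the UI is reduced to a map of element ids to text, and `diff` and `applyPatches` are hypothetical helper names.

```typescript
// A rendered UI modeled as element id -> text (far simpler than a real render tree).
type RenderState = Record<string, string>;

interface Patch {
  id: string;
  text: string | null; // null means the element was removed
}

// Server side: compute only what changed between two renders.
function diff(previous: RenderState, next: RenderState): Patch[] {
  const patches: Patch[] = [];
  for (const id of Object.keys(next)) {
    const text = next[id];
    if (text !== undefined && previous[id] !== text) patches.push({ id, text });
  }
  for (const id of Object.keys(previous)) {
    if (!(id in next)) patches.push({ id, text: null });
  }
  return patches;
}

// Client side: apply the received patches to the current view.
function applyPatches(state: RenderState, patches: Patch[]): RenderState {
  const result: RenderState = { ...state };
  for (const patch of patches) {
    if (patch.text === null) delete result[patch.id];
    else result[patch.id] = patch.text;
  }
  return result;
}

const before: RenderState = { title: "Orders", count: "3", banner: "Welcome" };
const after: RenderState = { title: "Orders", count: "4" };

// Only two small patches travel over the connection; "title" is untouched.
const patches = diff(before, after);
const clientView = applyPatches(before, patches);
```

Sending only the patches keeps the traffic over the connection small, but it also means the server must remember the previous render for every connected user, which is the root of the scalability concern discussed later.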

Using Blazor Server behind load balancers or proxy servers requires special attention. As described in the article here, forwarded headers should be enabled (default configuration in IIS) and the load balancers should support sticky sessions. You can also use Redis to create a backplane as described here.

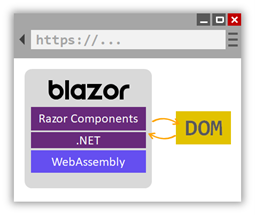

Blazor Client (WebAssembly)

Here, .NET code runs inside the browser. The .NET runtime is compiled to WebAssembly (a sort of assembly language for the web) and is downloaded to the browser together with the application's assemblies, which can optionally be compiled ahead of time to WebAssembly as well. The Blazor WebAssembly runtime uses JavaScript interop to handle DOM manipulation and browser API calls.

WebAssembly is an open web standard and is supported by all modern web browsers. Blazor is not the only technology using WebAssembly: most of the other big vendors develop tools and platforms that make use of it.

Blazor Server vs. client recommendation

For traditional enterprise business applications, Blazor Server usually seems the best option, but in certain scenarios Blazor Wasm has clear advantages:

- The responsiveness of your app must be optimal. Blazor Wasm handles UI events in the browser and so avoids the network round trip to the server that Blazor Server needs for every interaction.

- You need offline support.

- You expect many users (>100). Blazor Server is less scalable, as each user requires server resources to handle the client connection and client state.

| | Weight | Blazor Server | Score | Total | Blazor Client | Score | Total |
|---|---|---|---|---|---|---|---|
| Learning curve | 5 | Easier to configure because of the security model. | 8 | 40 | More complex, with the same problems as any SPA, but not significantly harder than Blazor Server. | 7 | 35 |
| Productivity | 5 | Enables 2-tier apps, which demand less effort than 3-tier apps. | 9 | 45 | Enforces 3 tiers and the use of an API. | 7 | 35 |
| Supportability | 5 | The same as traditional MVC apps. | 9 | 45 | More difficult to support due to the security model. | 7 | 35 |
| Maturity | 4 | First version with production support. | 7 | 28 | Came later, but is now supported in production by Microsoft. | 7 | 28 |
| Community | 3 | For the moment, adoption seems better. | 6 | 18 | WebAssembly has the highest buzz, so it will probably see the most adoption in the long run. | 7 | 21 |
| Enforce best practice | 3 | Does not enforce 3 tiers or MVVM. | 4 | 12 | Enforces 3 tiers; using 3 tiers is usually a good practice. | 8 | 24 |
| Performance | 2 | Better performance at load time but a lot less scalable. | 6 | 12 | Longer load time but a lot more scalable. | 7 | 14 |
| Risk | Impact | | | | | | |
| Security | 4 | No more risk than a classical MVC app. | 0 | 0 | Higher risk of misconfiguration. | -1 | -4 |
| Lifecycle support | 5 | No dependency on browser support. | 0 | 0 | Browsers need to continue to support the WebAssembly standard, but the probability that they stop is low. | -1 | -5 |
| Total (Max 270) | | | | 200 | | | 183 |

When to choose Blazor over ASP.NET MVC?

Because developing and maintaining apps with Blazor is more productive than with ASP.NET MVC, and Blazor is easy to learn, Blazor is usually the best choice, except when:

- Your application has simple, possibly read-only requirements.

Many web applications are primarily consumed in a read-only fashion by most of their users. Read-only (or read-mostly) applications tend to be much simpler than applications that maintain and manipulate a great deal of state. Examples are intranet or public websites where anonymous users can easily make requests and there is little need for client-side logic. This type of public-facing website usually consists mainly of content with little client-side behavior. Such applications are easily built as traditional ASP.NET MVC web applications, which perform logic on the web server and render HTML to be displayed in the browser. The fact that each unique page of the site has its own URL that can be bookmarked and indexed by search engines (by default, without having to add this functionality as a separate feature of the application) is also a clear benefit in such scenarios.

- Your application ecosystem is based on MVC and is too small or too simple to invest in Blazor.

When your applications consist mainly of server-side processes, your UI is quite simple (e.g., dashboards, back-office CRUD apps, …), and you've already made a strong investment in MVC, then adding Blazor may not be worth the investment.

Angular vs. Blazor Score Card

Here we selected several criteria and assigned each a weight according to the specific needs and environment of enterprises that use .NET as their main technology stack. But each organization is unique and should adapt the scoring to its specific needs.

The weight ranges from 1 to 5, where 1 indicates minor importance and 5 an especially important, top-priority criterion.

The score for each criterion goes up to 10, where 10 indicates an exceptionally high-quality attribute and 1 is the minimum.

We also take risks into account at the bottom of the scorecard. There, the weight indicates the potential impact of the risk and the score its probability, from 0 (0% probability) to -10 (a risk that will occur with 100% probability).

- Learning curve: the amount of time a developer, on average, needs to invest to become productive with the technology.

- Productivity: how productive developers are with the given platform once they have mastered the technology.

- Supportability: how well can we efficiently support the platform?

- Community: how valuable, responsive, and broad is the developer community? When a platform has a strong community, the organization, its developers, and its users all benefit.

- Maturity: is the platform used for long enough that most of its first faults and inherent problems have been removed or reduced?

- Full Stack efficiency: how efficient is it for the project to work and to cope with the separation of the frontend and the backend? Does the adoption of this technology diminish the efficiency of the project teams and the organization because they must deal with different skill sets for backend and frontend?

- Finding resources: how easy is it to recruit developers on the market?

- Libraries choices: a good library well suited for the needs and well maintained improves productivity while minimizing the TCO. A large choice of libraries increases the probability of finding a library that satisfies the needs.

- Opinionated: a platform that believes a certain way of approaching a problem is inherently better and enables the developer to spend less time finding the right way of solving a problem.

- Performance: which platform has the fastest render time, load time, and refresh time, and consumes a minimal number of resources?
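The arithmetic behind the scorecard is simply weight times score, summed over all criteria, with the (negative) risk lines added on top. Here is a minimal sketch using the Blazor weights and scores from this scorecard; the `ScoreLine` type and the function names are my own hypothetical helpers.

```typescript
interface ScoreLine {
  criterion: string;
  weight: number; // 1 to 5 for criteria, potential impact for risks
  score: number;  // up to 10 for criteria, 0 down to -10 for risks
}

// Sum of weight x score over all lines.
function weightedTotal(lines: ScoreLine[]): number {
  return lines.reduce((sum, line) => sum + line.weight * line.score, 0);
}

// Best possible result: every criterion scores 10 and no risk applies.
function maxTotal(criteria: ScoreLine[]): number {
  return criteria.reduce((sum, line) => sum + line.weight * 10, 0);
}

const blazorCriteria: ScoreLine[] = [
  { criterion: "Learning curve", weight: 5, score: 9 },
  { criterion: "Productivity", weight: 5, score: 9 },
  { criterion: "Supportability", weight: 5, score: 9 },
  { criterion: "Community", weight: 4, score: 3 },
  { criterion: "Maturity", weight: 4, score: 6 },
  { criterion: "Full Stack efficiency", weight: 3, score: 9 },
  { criterion: "Finding resources", weight: 3, score: 4 },
  { criterion: "Libraries choices", weight: 3, score: 3 },
  { criterion: "Opinionated", weight: 2, score: 7 },
  { criterion: "Performance", weight: 2, score: 7 },
];

const blazorRisks: ScoreLine[] = [
  { criterion: "Abandon technology within 5 years", weight: 4, score: -1 },
  { criterion: "Adoption falls within the community", weight: 3, score: -2 },
];

const blazorTotal = weightedTotal(blazorCriteria) + weightedTotal(blazorRisks); // 247 - 10 = 237
const blazorMax = maxTotal(blazorCriteria); // 36 x 10 = 360
```

To adapt the scorecard to your own organization, change the weights and rerun the calculation; the ranking can shift noticeably when, for example, community and library choice weigh more than the learning curve.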

| | Weight | Blazor | Score | Total | Angular | Score | Total |
|---|---|---|---|---|---|---|---|
| Learning curve | 5 | Easy to learn for .NET devs, especially with MVC/Razor experience. Features that are not included require (limited) JS integration. | 9 | 45 | Steep learning curve, even for experienced JS devs. | 3 | 15 |
| Productivity | 5 | More tuned for RAD than Angular; even more productive than bare MVC (Gartner). | 9 | 45 | A lot of ceremony, but good tooling is provided (e.g., Visual Studio Code). | 4 | 20 |
| Supportability | 5 | Included in .NET 6 and Visual Studio. Blazor Server is the same as an MVC web app in terms of supportability (it is included in .NET 6, not shipped as a NuGet package). | 9 | 45 | Big lifecycle challenges due to the number of packages. | 2 | 10 |
| Community | 4 | Backed by Microsoft, but a small community made up exclusively of .NET devs. | 3 | 12 | Backed by Google, with an exceptionally large community. | 8 | 32 |
| Maturity | 4 | Supported since .NET Core 3.1 and considered mature by the community since .NET 6. | 6 | 24 | Very mature and battle-tested. | 9 | 36 |
| Full Stack efficiency | 3 | Although some devs specialize in backend or frontend work, Blazor is an integrated environment with one stack, where one dev can easily work on both with high efficiency. | 9 | 27 | Some real full-stack Angular/.NET devs are available. Experience shows that using two different stacks (.NET and Angular) often leads to a split between frontend and backend devs, which diminishes efficiency. | 4 | 12 |
| Finding resources | 3 | Finding experienced Blazor devs is more difficult than finding Angular devs. Nevertheless, for MVC devs, learning Blazor is easy. | 4 | 12 | Angular is one of the mainstream JS frameworks, but a lot of JS frameworks exist and finding Angular devs who know .NET is not easy. | 6 | 18 |
| Libraries choices | 3 | Limited choice of open-source libraries. Major vendors such as Telerik or DevExpress have Blazor UI component libraries. | 3 | 9 | Large choice of open-source and commercial libraries. | 8 | 24 |
| Opinionated | 2 | Although it's a full framework with data binding, devs have more choices to make than with Angular. | 7 | 14 | Angular is very opinionated and complete. | 8 | 16 |
| Performance | 2 | Less performant but still very acceptable. | 7 | 14 | The framework has been tuned for performance over the years. | 8 | 16 |
| Risk | Impact | | | | | | |
| Technology abandoned within 5 years | 4 | Taking the Microsoft lifecycle into consideration, the chance is extremely low within 5 years. | -1 | -4 | Chances are low, but AngularJS has proven that vendors can abandon a technology even when it is successful. | -1 | -4 |
| Adoption falls within the community | 3 | The probability is low, but it exists, that .NET devs stop adopting the tech due to the "Silverlight" history. | -2 | -6 | Angular has a large community, but it's losing traction. | -1 | -3 |
| Total (Max 360) | | | | 237 | | | 192 |